Today, the aim of my tutorial is to give you the “Pure Storage FlashArray VMware Best Practices” in one concise sheet.

As a storage administrator, it’s your duty (one to obey religiously) to apply all the best practices recommended by the respective OEM (original equipment manufacturer) in your infrastructure.

Implementing best practices avoids issues, errors and performance glitches that can impact the production environment hosting your critical business applications.

I decided to write this article because I recently built a Pure FlashArray X90 R2 environment and read the OEM’s PDFs to implement its best practices. I have jotted them down for you in a table for quick reference.

It will save you the reading and searching hassle, because I give a short description of each parameter so that you have some clarity before implementing it.

If you are new to the storage world or don’t know about Pure flash storage, let me give you a quick introduction.

What is Pure Storage Flash Array

Pure Storage FlashArray is a 100% NVMe, software-driven product from Pure Storage, a company that is growing very fast in the flash storage market. Gartner named Pure Storage a Leader in its 2019 Magic Quadrant for Primary Storage.

Pure Storage is a really good product that combines performance, scalability, simplicity, high redundancy and awesome vendor support. Its active-active cluster is also impressive: you can host the mediator (witness) in the Pure cloud for free, so you don’t need a third site to host it.

Their Evergreen offering is also eye-catching, and in my view the way they deliver data reduction is one of the milestones of the enterprise storage industry.

Enough about Pure; let’s talk directly about the recommended Pure settings.

Pure Storage Flash Array VMware Best Practices

I created this table by reading and consolidating all the parameters mentioned in the long guides, PDFs and support articles for Pure FlashArray. It will help you quickly understand and implement them in your environment.

In my view, these are the important, must-implement parameters. They are current as of today (July 2020); I will keep an eye on changes and update this table in the future.

| ESXi Parameter | ESXi Version | Recommended | Default |
|---|---|---|---|
| HardwareAcceleratedInit | All | 1 | 1 |
| HardwareAcceleratedMove | All | 1 | 1 |
| VMFS3.HardwareAcceleratedLocking | All | 1 | 1 |
| iSCSI Login Timeout | All | 30 s | 5 s |
| TCP Delayed ACK (iSCSI) | All | Disabled | Enabled |
| Jumbo Frames (Optional) | All | MTU 9000 | MTU 1500 |
| DSNRO / Number of outstanding IOs | 5.x, 6.x | 32 | 32 |
| Path Selection Policy | 5.x | Round Robin | MRU |
| IO Operations Limit (Path Switching) | 5.x | 1 | 1000 |
| UNMAP Block Count | 5.x | 1% of free VMFS space or less | 200 |
| EnableBlockDelete | 6.x and 7.x | 1 | 0 |
| Latency Based PSP (ESXi 7.0) | 7.x | sampling cycles: 16, latencyEvalTime: 30000 ms | sampling cycles: 16, latencyEvalTime: 30000 ms |
| VMFS Version | 6.x and 7.x | 6 | 5 |
| Datastore and permanent device loss | All | Power off and restart | |
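If you manage many hosts, checking each one against this table by hand gets tedious. Below is a minimal Python sketch, assuming you have already collected each option's current value (for example from the “Int Value” field of `esxcli system settings advanced list -o <option>`). The `RECOMMENDED` dict and `find_deviations` helper are my own illustrative names, not a Pure or VMware tool, and they only cover the host-wide advanced options from the table.

```python
from __future__ import annotations

from typing import Optional

# Host-wide advanced options from the table, keyed by their esxcli option path.
RECOMMENDED = {
    "/DataMover/HardwareAcceleratedInit": 1,
    "/DataMover/HardwareAcceleratedMove": 1,
    "/VMFS3/HardwareAcceleratedLocking": 1,
    "/VMFS3/EnableBlockDelete": 1,  # only relevant for VMFS 5 datastores
}


def find_deviations(current: dict[str, int]) -> dict[str, tuple[Optional[int], int]]:
    """Return {option: (current_value, recommended_value)} for each mismatch.

    Options missing from `current` are reported with a current value of None.
    """
    deviations = {}
    for option, wanted in RECOMMENDED.items():
        actual = current.get(option)
        if actual != wanted:
            deviations[option] = (actual, wanted)
    return deviations


if __name__ == "__main__":
    # Hypothetical values collected from one host.
    host = {
        "/DataMover/HardwareAcceleratedInit": 1,
        "/DataMover/HardwareAcceleratedMove": 0,  # XCOPY disabled
        "/VMFS3/HardwareAcceleratedLocking": 1,
    }
    for option, (actual, wanted) in find_deviations(host).items():
        print(f"{option}: current={actual}, recommended={wanted}")
```

For the sample host above, it flags HardwareAcceleratedMove (0 instead of 1) and EnableBlockDelete (not collected).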

Let me give you some detail about each option so that you can get a clear understanding of the parameters.

Parameter descriptions

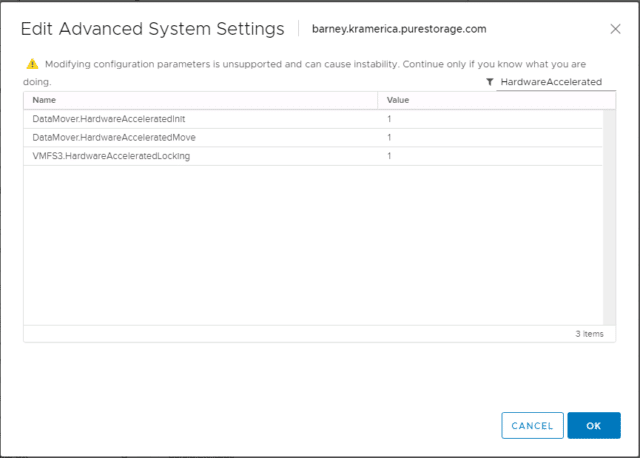

HardwareAcceleratedInit

This is one of the VAAI (vStorage APIs for Array Integration) features and must be enabled. Disabling VAAI features can greatly reduce the performance and efficiency of the FlashArray.

VAAI features offload and accelerate certain operations in a VMware infrastructure. They are set to 1 (enabled) by default, but you should still validate them.

HardwareAcceleratedInit enables and controls offloaded block zeroing, using the Write Same command or the storage vendor's specific SCSI (0xFE) command.

HardwareAcceleratedMove

Enables and controls the use of XCOPY, which offloads copying or migrating data within the array. The default is 1 (enabled).

VMFS3.HardwareAcceleratedLocking

It enables and controls Atomic Test & Set (ATS), which is used during file creation and locking on a VMFS volume. It must be enabled (set to 1); disabling it may impact array performance. All these VAAI features can be enabled from the vSphere Web Client or the command line.
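To validate and enable the three VAAI options from the command line, the sketch below simply builds the `esxcli system settings advanced` list and set commands for each one. The helper name is mine and purely illustrative; run the printed commands on the ESXi host yourself.

```python
from __future__ import annotations

# The three VAAI primitives described above, as ESXi advanced-option paths.
VAAI_OPTIONS = (
    "/DataMover/HardwareAcceleratedInit",  # block zeroing (Write Same)
    "/DataMover/HardwareAcceleratedMove",  # full copy (XCOPY)
    "/VMFS3/HardwareAcceleratedLocking",   # Atomic Test & Set (ATS)
)


def vaai_commands(enable: bool = True) -> list[str]:
    """Build esxcli commands that show, then set, each VAAI option."""
    value = 1 if enable else 0
    commands = []
    for option in VAAI_OPTIONS:
        commands.append(f"esxcli system settings advanced list -o {option}")
        commands.append(f"esxcli system settings advanced set -i {value} -o {option}")
    return commands


if __name__ == "__main__":
    print("\n".join(vaai_commands()))
```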

iSCSI Login Timeout

By default, ESXi waits only 5 seconds for an iSCSI login to complete; if the array has not responded by then, ESXi abandons the attempt and immediately tries to log in again.

During a controller reboot or failover, this can result in a “login storm” that puts extra load on the storage array. The value recommended in Pure’s documents is 30 seconds, as it ensures iSCSI sessions survive controller reboots during maintenance or failures.
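To see why a short timeout hurts, here is a deliberately simplified model (an assumption for illustration, not ESXi’s exact retry logic): each session waits up to the login timeout, then retries at once. The host and session counts are hypothetical. The sketch also builds the `esxcli iscsi adapter param set` command for the `LoginTimeout` key; `vmhba64` is a placeholder, so use your host’s iSCSI adapter name.

```python
import math


def login_attempts(outage_s: float, login_timeout_s: float, sessions: int) -> int:
    """Rough count of login attempts the array sees during an outage.

    Simplified model (an assumption): every session waits up to
    `login_timeout_s` for a reply, then retries immediately.
    """
    return sessions * math.ceil(outage_s / login_timeout_s)


def login_timeout_command(adapter: str, seconds: int = 30) -> str:
    """esxcli command that sets LoginTimeout on one iSCSI adapter."""
    return f"esxcli iscsi adapter param set -A {adapter} -k LoginTimeout -v {seconds}"


if __name__ == "__main__":
    sessions = 20 * 4  # hypothetical: 20 hosts with 4 iSCSI sessions each
    print(login_attempts(25, 5, sessions))   # 25 s controller reboot, 5 s timeout
    print(login_attempts(25, 30, sessions))  # same reboot, 30 s timeout
    print(login_timeout_command("vmhba64"))
```

In this toy scenario the 5 second timeout produces 400 login attempts against the array, while 30 seconds produces 80 (one per session).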

TCP Delayed ACK (iSCSI)

With Delayed ACK, the receiver holds back the acknowledgement of packets instead of acknowledging each one immediately. When there is a mismatch in data processing capabilities between source and destination network elements, the network becomes congested, and delayed acknowledgements make the situation worse.

Congestion may result in timeouts, delays and packet loss. Pure suggests disabling Delayed ACK to improve performance in a congested network.

Jumbo Frames (Optional)

VMware can send jumbo frames out to the physical network only if all end-to-end devices, such as network switches, host adapters and storage, support them. The default MTU is 1500, but you can set it to 9000 to enable jumbo frames.

It is an optional parameter because whether jumbo frames are supported depends on your environment. Keep in mind that if jumbo frames are enabled, Delayed ACK must be disabled to avoid retransmission of larger packets.
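The benefit of jumbo frames is mostly arithmetic: fewer frames and headers for the same data. The sketch below assumes IPv4 and TCP headers without options (40 bytes) and standard Ethernet wire overhead (38 bytes), and ignores iSCSI PDU headers, so treat the numbers as approximate. It also computes the payload size for an end-to-end test with `vmkping -d -s <size> <array IP>` (`-d` sets don’t-fragment), which is the MTU minus 28 bytes of IPv4 and ICMP headers.

```python
import math

IP_TCP_HEADERS = 40     # IPv4 (20 bytes) + TCP (20 bytes), no options
ETH_WIRE_OVERHEAD = 38  # header 14 + FCS 4 + preamble 8 + inter-frame gap 12
IP_ICMP_HEADERS = 28    # IPv4 (20 bytes) + ICMP (8 bytes)


def frames_needed(payload_bytes: int, mtu: int) -> int:
    """Ethernet frames needed to carry `payload_bytes` of TCP payload."""
    return math.ceil(payload_bytes / (mtu - IP_TCP_HEADERS))


def wire_efficiency(mtu: int) -> float:
    """Fraction of bytes on the wire that are TCP payload, for full frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_WIRE_OVERHEAD)


def vmkping_size(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented packet."""
    return mtu - IP_ICMP_HEADERS


if __name__ == "__main__":
    one_mib = 1024 * 1024
    for mtu in (1500, 9000):
        print(mtu, frames_needed(one_mib, mtu),
              round(wire_efficiency(mtu), 3), vmkping_size(mtu))
```

For a 1 MiB transfer this gives 719 frames at MTU 1500 versus 118 at MTU 9000, and a vmkping payload of 8972 bytes for a 9000-byte MTU. If that large vmkping fails while a small one succeeds, some hop in the path is not configured for jumbo frames.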

DSNRO / Number of outstanding IOs

Disk.SchedNumReqOutstanding (DSNRO) comes into play when two or more virtual machines use the same LUN or datastore: it limits the number of outstanding IOs (not IOPS) that ESXi will issue to that datastore or LUN.

The default value is 32, and Pure does not suggest changing this parameter unless an issue with outstanding IOs is reported.
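Here is a simplified Python model of how DSNRO caps a shared LUN, assuming ESXi 5.5 or later behavior, where DSNRO is set per device and cannot exceed the device queue depth. The function and the queue depth value are illustrative. On a real host, `esxcli storage core device list -d <device>` reports the current value as “No of outstanding IOs with competing worlds”, and `esxcli storage core device set -d <device> -O <value>` changes it.

```python
def effective_outstanding_ios(active_vms: int, device_queue_depth: int,
                              dsnro: int = 32) -> int:
    """Simplified per-LUN cap on outstanding IOs (illustrative model).

    - No active VMs: nothing outstanding.
    - One active VM: it may use the full device queue depth; DSNRO is not applied.
    - Two or more active VMs: the LUN is capped at min(DSNRO, device queue depth).
    """
    if active_vms <= 0:
        return 0
    if active_vms == 1:
        return device_queue_depth
    return min(dsnro, device_queue_depth)


if __name__ == "__main__":
    # Hypothetical device queue depth of 64 (the real value depends on the HBA/driver).
    for vms in (1, 2, 5):
        print(vms, effective_outstanding_ios(vms, device_queue_depth=64))
```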

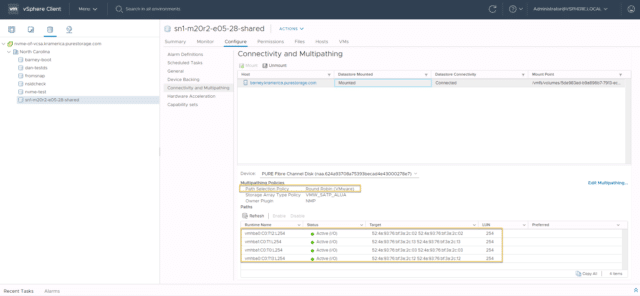

Path Selection Policy

VMware uses the Native Multipathing Plugin (NMP) to manage and load-balance IOs. There are three path selection policies: Fixed, MRU (Most Recently Used) and Round Robin (RR).

Pure suggests using Round Robin as the path selection policy to best utilize the active-active front end of the FlashArray. Round Robin maximizes performance by distributing IOs across all discovered FC or iSCSI paths.

Recent ESXi versions already use RR as the default path selection policy for FlashArray devices, but if you have a legacy version such as 5.x, validate the policy and change it from MRU to RR cautiously.
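For hosts that do not already apply Round Robin to FlashArray devices, Pure’s guides describe adding a SATP claim rule. The sketch below just assembles that command string, plus the per-device command for LUNs that are already claimed (a new rule only affects devices claimed after it is added). Double-check the exact rule against the current Pure documentation for your ESXi version; the device ID in the demo is a placeholder.

```python
def pure_satp_rule_command(iops: int = 1) -> str:
    """SATP claim rule applying Round Robin (switching every `iops` IOs) to FlashArray LUNs."""
    return (
        'esxcli storage nmp satp rule add -s "VMW_SATP_ALUA" -V "PURE" '
        f'-M "FlashArray" -P "VMW_PSP_RR" -O "iops={iops}" -e "FlashArray SATP Rule"'
    )


def set_device_psp_command(device: str) -> str:
    """Switch one already-claimed device to Round Robin."""
    return f"esxcli storage nmp device set --device={device} --psp=VMW_PSP_RR"


if __name__ == "__main__":
    print(pure_satp_rule_command())
    print(set_device_psp_command("<naa-device-id>"))  # placeholder device ID
```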

IO Operations Limit (Path Switching)

It is the threshold that determines how often ESXi switches the logical path for IOs. The default value is 1000 (ESXi moves to the next path after 1000 IOs); tuning it to 1 makes ESXi switch paths after every IO, which can noticeably increase performance, and Pure highly recommends it.
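The effect of the IO operations limit is easy to simulate. The toy model below (my own, ignoring queueing and latency) counts which path each IO lands on when Round Robin moves to the next path after every `iops_limit` IOs. For existing devices, the limit is set per device with `esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device=<device>`.

```python
from __future__ import annotations

from collections import Counter


def round_robin(num_ios: int, paths: list[str], iops_limit: int) -> Counter:
    """Count IOs per path when RR moves to the next path every `iops_limit` IOs."""
    counts: Counter = Counter()
    for io in range(num_ios):
        path = paths[(io // iops_limit) % len(paths)]
        counts[path] += 1
    return counts


if __name__ == "__main__":
    paths = ["path-1", "path-2", "path-3", "path-4"]  # hypothetical path names
    print(round_robin(1000, paths, iops_limit=1000))
    print(round_robin(1000, paths, iops_limit=1))
```

With a burst of 1000 IOs over four paths, a limit of 1000 sends every IO down the first path, while a limit of 1 spreads them 250 per path.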

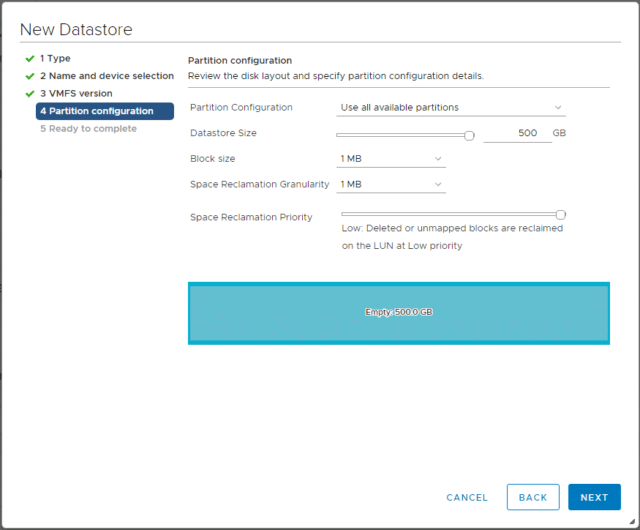

UNMAP Block Count

Space reclamation for thin-provisioned storage differs depending on the ESXi version. On ESXi 5.x, Pure suggests setting the UNMAP block count to “1% of free VMFS space or less” instead of the default value of 200.

ESXi 6.7 fine-tuned space reclamation further, adding medium and high priorities alongside the default low. Currently, Pure recommends keeping the default low priority, because testing is still in progress and the recommendation may change in the future.
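On ESXi 5.5, the block count is passed as a number of VMFS blocks to `esxcli storage vmfs unmap --volume-label=<datastore> --reclaim-unit=<count>`. Here is a small helper, assuming the VMFS 5 unified 1 MB block size, that converts 1% of free space into that count. The datastore name and free-space figure are hypothetical.

```python
VMFS5_BLOCK_MB = 1  # VMFS 5 uses a unified 1 MB block size


def reclaim_unit(free_space_gb: float, percent: float = 1.0) -> int:
    """VMFS blocks equal to `percent`% of free space, rounded down (at least 1)."""
    free_mb = free_space_gb * 1024
    return max(1, int(free_mb * percent / 100 // VMFS5_BLOCK_MB))


def unmap_command(datastore: str, free_space_gb: float) -> str:
    """Manual UNMAP command using 1% of the datastore's free space."""
    return (f"esxcli storage vmfs unmap --volume-label={datastore} "
            f"--reclaim-unit={reclaim_unit(free_space_gb)}")


if __name__ == "__main__":
    # Hypothetical datastore with 2048 GB free.
    print(unmap_command("pure-ds01", 2048))
```

With 2048 GB free, 1% works out to 20971 blocks, so the reclaim unit is 20971 instead of the default 200.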

EnableBlockDelete

Provides end-to-end, in-guest support for space reclamation (UNMAP) and applies only to VMFS 5. The default value is 0 (disabled); you need to set it to 1 (enabled). If you are using VMFS 6 or later, you don’t need to worry about it at all.
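A tiny sketch of that rule: the helper below (an illustrative name of mine) returns the esxcli command to enable EnableBlockDelete only for VMFS 5 datastores, and nothing for VMFS 6 or later.

```python
from typing import Optional


def enable_block_delete_command(vmfs_version: int) -> Optional[str]:
    """Command enabling in-guest UNMAP passthrough, or None when not needed."""
    if vmfs_version >= 6:
        return None  # VMFS 6 onwards: this setting is not needed
    return "esxcli system settings advanced set -i 1 -o /VMFS3/EnableBlockDelete"


if __name__ == "__main__":
    for version in (5, 6):
        print(version, enable_block_delete_command(version))
```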

Latency Based PSP (ESXi 7.0)

It is a sub-policy of Round Robin (RR), introduced in vSphere 6.7 U1, that makes RR path selection more intelligent. Every 30 seconds (latencyEvalTime = 30000 ms) it re-evaluates the paths to find out whether any path is degraded, monitoring latency and outstanding IOs.

By default it samples 16 IOs per path in every sampling cycle and dynamically decides which paths to use and which to exclude.
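To make the idea concrete, here is a simplified Python sketch of latency-based selection. It is not VMware’s actual algorithm: it averages the first `sampling_cycles` latency samples per path and excludes any path slower than a hypothetical `tolerance` multiple of the fastest one. On a real 6.7 U1 or later host, the policy is enabled per device with `esxcli storage nmp psp roundrobin deviceconfig set` and `--type=latency`; check that command’s `--help` on your build for the sampling-cycle and evaluation-time options.

```python
from __future__ import annotations

from statistics import mean


def pick_paths(samples_ms: dict[str, list[float]], sampling_cycles: int = 16,
               tolerance: float = 1.5) -> list[str]:
    """Rank paths by average sampled latency and drop clearly degraded ones.

    Simplified illustration, not VMware's algorithm: only the first
    `sampling_cycles` samples per path are used, and a path is kept if its
    average is within `tolerance` times the fastest path's average.
    """
    averages = {path: mean(lat[:sampling_cycles]) for path, lat in samples_ms.items()}
    best = min(averages.values())
    usable = [path for path, avg in averages.items() if avg <= best * tolerance]
    return sorted(usable, key=averages.__getitem__)


if __name__ == "__main__":
    # Hypothetical per-IO latencies (ms) for one evaluation window.
    samples = {
        "path-A": [0.5] * 16,
        "path-B": [0.6] * 16,
        "path-C": [5.0] * 16,  # degraded path
    }
    print(pick_paths(samples))
```

In this example, path-C is excluded and IOs would be spread over path-A and path-B.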

VMFS Version

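Pure suggests moving all your VMFS (Virtual Machine File System) datastores from version 5 to version 6 to get advanced file system features and improved performance. Note that VMFS 5 cannot be upgraded to VMFS 6 in place: create new VMFS 6 datastores and Storage vMotion your VMs to them.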

Datastore and permanent device loss

Permanent device loss (PDL) and all paths down (APD) are situations in which an ESXi host loses its connection to a storage device.

Pure recommends setting the response to “Power off and restart” so that your VMs can come back online on a host that still has access to storage if an intermittent fabric issue or a DR (disaster recovery) event happens due to unforeseen circumstances.

Final Words

In this post, I have tried to put the must-enable Pure recommendations and a summary of each option in one place, so you don’t need to read bulky PDFs or documentation.

But if your thirst for knowledge still pleads for more, read these Pure best practice documents: